Here's a simple piece of C code (try zipped version) for testing how to parallelize code with OpenMP. It compiles with

gcc -fopenmp -lm otest.c

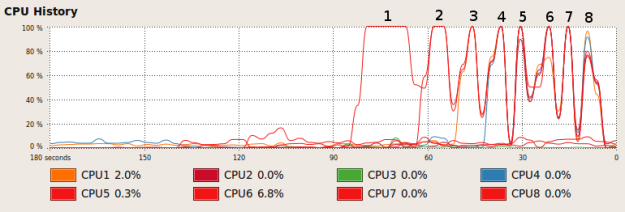

The CPU-load while running looks like this:

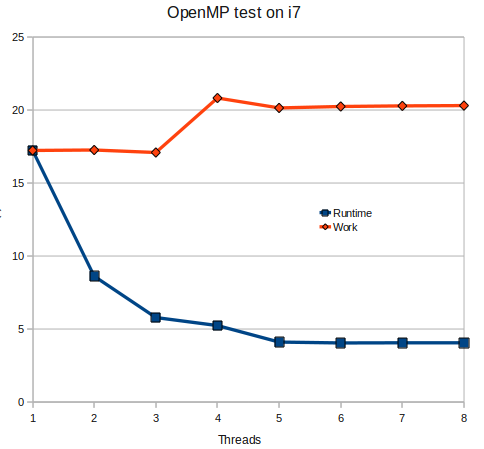

Looks like two logical CPUs never get used (two low lines beyond "5" in the chart). It outputs some timing information:

running with 1 threads: runtime = 17.236827 s clock=17.230000

running with 2 threads: runtime = 8.624231 s clock=17.260000

running with 3 threads: runtime = 5.791805 s clock=17.090000

running with 4 threads: runtime = 5.241023 s clock=20.820000

running with 5 threads: runtime = 4.107738 s clock=20.139999

running with 6 threads: runtime = 4.045839 s clock=20.240000

running with 7 threads: runtime = 4.056122 s clock=20.280001

running with 8 threads: runtime = 4.062750 s clock=20.299999

which can be plotted like this:

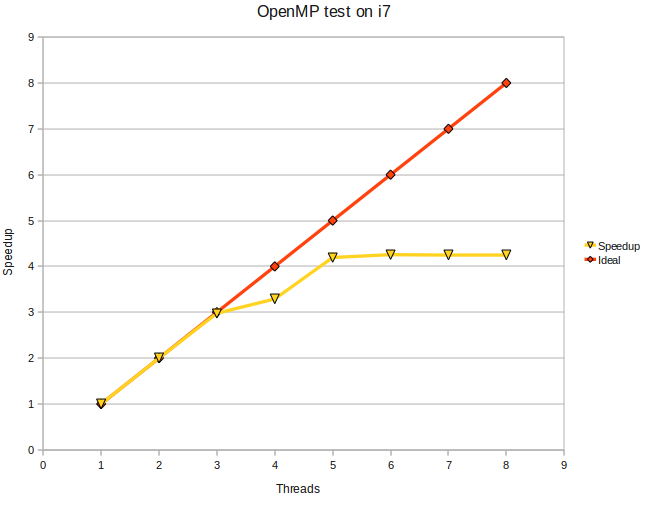

I'm measuring the CPU time spent by the program using clock(), which I hope is some kind of measure of how much work is performed. Note how the amount of work increases (from about 17.2 s with 1 to 3 threads to about 20 s with 4 or more), presumably due to overheads related to creating threads and communication between them. Another plot shows the speedup:

The i7 uses Hyper-Threading to present 8 logical CPUs to the system with only 4 physical cores. Anyone care to run this on a real 8-core machine? 🙂

Next stop is getting this to work from a Boost Python extension.

On a Q9300 the results look like this:

running with 1 threads: runtime = 27.132321 s clock=26.980000

running with 2 threads: runtime = 13.214080 s clock=26.270000

running with 3 threads: runtime = 8.820247 s clock=26.049999

running with 4 threads: runtime = 7.251426 s clock=26.010000

running with 5 threads: runtime = 7.349411 s clock=26.330000

running with 6 threads: runtime = 6.725370 s clock=26.219999

running with 7 threads: runtime = 6.689425 s clock=26.150000

running with 8 threads: runtime = 6.897340 s clock=25.959999

The office has a quad Intel(R) Xeon(R) CPU X7350 @ 2.93GHz (16 total cores, no HT) running 64-bit Ubuntu 8.04. On it, I measure a speedup of 8.00 using 8 threads and 15.91 using 16 threads.

1 thread runtime: 21.69 cpu time: 21.69.

16 thread runtime: 1.36 cpu time: 21.72.

Thanks for the test Jeff. This trivially parallel code seems to scale well.

A friend of mine did some testing on Windows with VS2008 and found the program to run much faster. Can the gcc implementation of OpenMP really be that bad? Comparing gcc with and without OpenMP also leads to confusing results:

gcc -lm -O3 -o otest otest.c

./otest

running with 1 threads: runtime = 4.988194 s clock=4.970000

running with 2 threads: runtime = 4.983637 s clock=4.970000

gcc -lm -O3 -o otest otest.c -fopenmp

./otest

running with 1 threads: runtime = 27.958443 s clock=27.959999

running with 2 threads: runtime = 14.037088 s clock=28.030001

Very strange.

I played with the code some more too. By now my test code is fairly different from yours, so I've put a copy at http://media.unpythonic.net/emergent-files/sandbox/otest.c

I did not find a big difference in performance by turning off -fopenmp, but I did find that specifying -fno-math-errno provided a huge speedup with and without -fopenmp (base runtime is a bit higher probably because I changed the matrix size to be 128000 instead of 10000):

$ gcc -std=c99 -fopenmp -O3 otest.c -lm && ./a.out

#threads wall cpu sum-of-c

1 28.975817 28.970000 7659783.500000

2 14.487500 28.950000 7659783.500000

4 7.255789 28.960000 7659783.500000

8 3.652288 29.020000 7659783.500000

16 1.927230 28.990000 7659783.500000

$ gcc -std=c99 -fopenmp -fno-math-errno -O3 otest.c -lm && ./a.out

1 5.955010 5.960000 7659783.500000

2 2.977407 5.950000 7659783.500000

4 1.494880 5.950000 7659783.500000

8 0.760135 5.910000 7659783.500000

16 0.411194 5.970000 7659783.500000

$ gcc -std=c99 -O3 otest.c -lm && ./a.out

1 28.970354 28.970000 7659783.500000

$ gcc -std=c99 -O3 otest.c -fno-math-errno -lm && ./a.out

1 5.952739 5.950000 7659783.500000

I tested the original code on a dual 2.0 GHz Opteron under Ubuntu 9.04. There, -fno-math-errno made only a small difference, but with Jeff's version there was a huge speedup.

Original version:

jamse@jamse-desktop:~$ gcc -fopenmp -lm -O3 -o otest otest.c

jamse@jamse-desktop:~$ ./otest

running with 1 threads: runtime = 28.173516 s clock=28.020000

running with 2 threads: runtime = 14.892576 s clock=28.200001

jamse@jamse-desktop:~$ gcc -fno-math-errno -fopenmp -lm -O3 -o otest otest.c

jamse@jamse-desktop:~$ ./otest

running with 1 threads: runtime = 27.736684 s clock=27.480000

running with 2 threads: runtime = 13.913317 s clock=27.450001

Jeff's version:

jamse@jamse-desktop:~$ gcc -std=c99 -fopenmp -lm -O3 -o otest2 otest2.c

jamse@jamse-desktop:~$ ./otest2

1 36.001104 35.940000 7659783.500000

2 18.074438 35.860000 7659783.500000

jamse@jamse-desktop:~$ gcc -std=c99 -fno-math-errno -fopenmp -lm -O3 -o otest2 otest2.c

jamse@jamse-desktop:~$ ./otest2

1 3.425272 3.410000 7659783.500000

2 1.720572 3.430000 7659783.500000

I tested the original code on my dual-socket Intel E520 @ 2.85 GHz:

twebb@saraswati:~/tmp/tmp2%gcc -fopenmp -lm -O3 otest.c

twebb@saraswati:~/tmp/tmp2%./a.out

running with 1 threads: runtime = 14.035480 s clock=14.020000

running with 2 threads: runtime = 7.038971 s clock=14.080000

running with 3 threads: runtime = 4.702512 s clock=14.070000

running with 4 threads: runtime = 3.524094 s clock=14.050000

running with 5 threads: runtime = 2.891979 s clock=14.030000

running with 6 threads: runtime = 2.400413 s clock=13.970000

running with 7 threads: runtime = 2.059968 s clock=14.060000

running with 8 threads: runtime = 1.781473 s clock=14.100000